Components

Tape Library

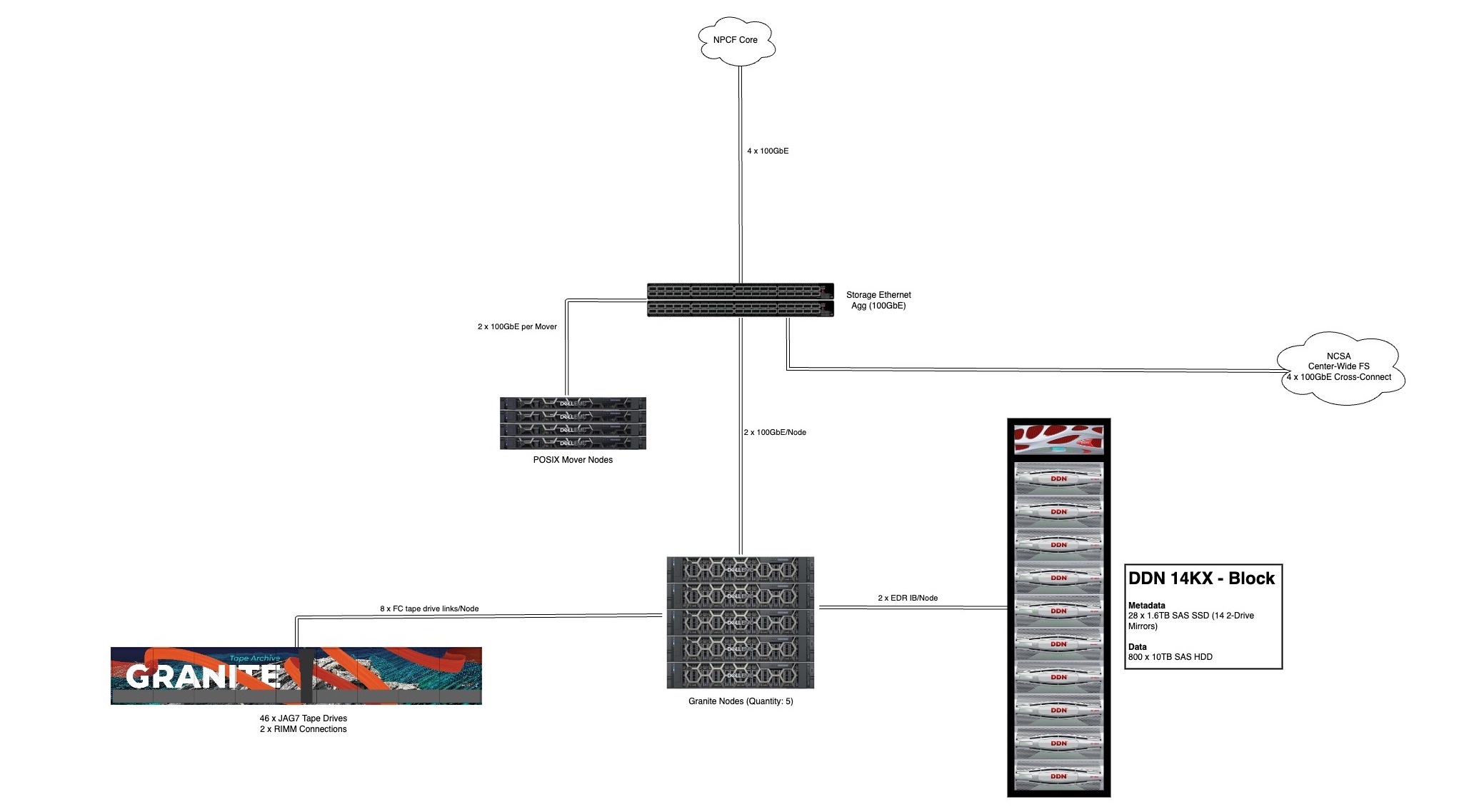

Granite is made up of a single 19-frame Spectra TFinity library, which is currently capable of holding ~65PB of raw data, or ~32PB of replicated storage. Four mover nodes drive the library, using 30 TS1140 tape drives to read and write data to and from over 9,000 IBM 3592-JC tapes.

Granite Mover Nodes

Four nodes currently form Granite's primary infrastructure. Each node connects over direct Fibre Channel links to 8 tape interfaces on the library, for 32 interfaces in total: 30 tape drives plus 2 robotic controllers, because two of the nodes each give up one tape drive to host the QIP interface for library control. The nodes also connect to two NetApp E2600 couplets: one houses archive metadata, and the other holds data in the form of a disk cache. Each Granite node is connected to each NetApp twice, once to each of the unit's controllers.
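The drive count follows directly from the cabling described above. The short sketch below only restates that arithmetic; the constant names are illustrative and do not come from any Granite tooling.

```python
# Counts taken from the text: 4 mover nodes, 8 direct Fibre Channel tape
# interfaces per node, and 2 interfaces (one on each of two nodes) used
# for library (robotic) control instead of a tape drive.
MOVER_NODES = 4
FC_INTERFACES_PER_NODE = 8
ROBOTIC_CONTROL_INTERFACES = 2


def tape_drive_count(nodes: int, per_node: int, control: int) -> int:
    """Return how many tape interfaces remain for drives after library control."""
    return nodes * per_node - control


total_interfaces = MOVER_NODES * FC_INTERFACES_PER_NODE
drives = tape_drive_count(MOVER_NODES, FC_INTERFACES_PER_NODE, ROBOTIC_CONTROL_INTERFACES)

# Two nodes drive 7 tape drives each; the other two drive 8 each.
drives_per_node = [7, 7, 8, 8]

print(f"{total_interfaces} interfaces, {drives} tape drives")
```

This prints `32 interfaces, 30 tape drives`, matching the 30 TS1140 drives in the library.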

Each Granite node is also connected at 2x100GbE to the SET aggregation switch, which in turn uplinks at 2x100GbE to the NPCF core network.
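As a rough illustration of this layout, the sketch below compares the Granite nodes' combined link bandwidth with the switch's uplink to the core. It assumes that every link runs at its full 100Gb/s line rate and ignores any other traffic sharing the aggregation switch; real transfer rates will also be bounded by the tape drives and the disk cache.

```python
# Link counts from the text; the line rate is nominal 100GbE.
NODES = 4
LINKS_PER_NODE = 2
UPLINK_LINKS = 2
LINK_GBPS = 100

# Combined bandwidth between the Granite nodes and the aggregation switch.
node_facing_gbps = NODES * LINKS_PER_NODE * LINK_GBPS
# Bandwidth between the aggregation switch and the NPCF core network.
uplink_gbps = UPLINK_LINKS * LINK_GBPS
# How much more node-facing bandwidth there is than core uplink bandwidth.
ratio = node_facing_gbps / uplink_gbps

print(f"{node_facing_gbps} Gb/s node-facing, {uplink_gbps} Gb/s uplink, "
      f"{ratio:.0f}:1")
```

Under these assumptions, traffic leaving Granite for the core network is limited by the 200Gb/s uplink rather than by the nodes' own 800Gb/s of links.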

Data Mover Nodes

. This is also used as the NFS mount point that serves the Globus data movers and other hosts that access the archive.

Disk Cache

The archive disk cache is where all data must land when it is ingested into or extracted from the archive. It currently consists of a DDN SFA 14KX unit with a mix of SAS SSDs (for metadata) and SAS HDDs (for capacity), which puts ~2PB of disk cache in front of the archive.
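Because every file passes through the disk cache, a large recall from tape is bounded by the cache's ~2PB capacity. The sketch below is a hypothetical illustration of that constraint, not part of Granite's software: it greedily groups an ordered list of recall requests into batches whose total size fits in the cache. The `Request` type, `plan_batches` function, and the exact usable cache size are all assumptions.

```python
from dataclasses import dataclass
from typing import List

PB = 10**15
CACHE_BYTES = 2 * PB  # ~2PB from the text; the usable figure may be lower


@dataclass
class Request:
    """Hypothetical recall request: a file path and its size in bytes."""
    path: str
    size_bytes: int


def plan_batches(requests: List[Request], cache_bytes: int = CACHE_BYTES) -> List[List[Request]]:
    """Greedily group requests, keeping their order, into cache-sized batches."""
    batches: List[List[Request]] = []
    current: List[Request] = []
    used = 0
    for req in requests:
        if req.size_bytes > cache_bytes:
            raise ValueError(f"{req.path} is larger than the disk cache")
        # Start a new batch when this request would overflow the cache.
        if used + req.size_bytes > cache_bytes:
            batches.append(current)
            current, used = [], 0
        current.append(req)
        used += req.size_bytes
    if current:
        batches.append(current)
    return batches
```

For example, requests of 1PB, 1.5PB, and 0.5PB produce two batches: `[1PB]` and `[1.5PB, 0.5PB]`. A greedy, order-preserving pass keeps the example simple; a real scheduler would also weigh tape mount order and cache eviction.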

Cluster Export Nodes

Granite shares its data movers with Taiga, which allows quicker and more direct Globus transfers between Taiga and Granite. The tape archive is mounted via NFS directly on the Globus mover export nodes, so they have direct access to the archive.
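On a Linux export node, one way to confirm that the archive is NFS-mounted is to look it up in `/proc/mounts`. The sketch below assumes a hypothetical mount point `/archive`, because the real path is not given here. It also ignores the octal escaping that `/proc/mounts` applies to spaces in paths.

```python
import os

NFS_TYPES = {"nfs", "nfs4"}


def is_nfs_mounted(mount_point: str, mounts_text: str) -> bool:
    """Return True if mount_point is listed with an NFS filesystem type.

    mounts_text follows the /proc/mounts format, one mount per line:
    device mount_point fstype options dump pass
    """
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == mount_point and fields[2] in NFS_TYPES:
            return True
    return False


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        # "/archive" is a hypothetical mount point used for illustration.
        print(is_nfs_mounted("/archive", f.read()))
```

Passing the mount table in as text keeps the check easy to test without a live NFS mount.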