| HTML |

|---|

<div style="background-color: yellow; border: 2px solid red; margin: 4px; padding: 2px; font-weight: bold; text-align: center;">

The HAL documentation has moved to <a href="https://docs.ncsa.illinois.edu/systems/hal/">https://docs.ncsa.illinois.edu/systems/hal/</a>. Please update any bookmarks you may have.

<br>

Click the link above if you are not automatically redirected in 7 seconds.

</br>

</div>

<meta http-equiv="refresh" content="7; URL='https://docs.ncsa.illinois.edu/systems/hal/en/latest/new-user.html'" /> |

Step 1. Apply for a User Account

...

Clicking "Submit Form" completes only the FIRST form. Please click "HERE" to complete the second form.

...

New users need to set up their Duo device at https://duo.security.ncsa.illinois.edu/.

If you run into any problems, see https://go.ncsa.illinois.edu/2fa for common questions and answers, or send an email to help+duo@ncsa.illinois.edu for additional help.

...

| Code Block |

|---|

|

ssh <username>@hal.ncsa.illinois.edu |

or

| Code Block |

|---|

|

ssh <username>@hal-login2.ncsa.illinois.edu |
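If you switch between the two login hosts often, a small shell helper can save retyping the full host names. This is a minimal sketch, not part of HAL itself: the function name `hal_ssh_command` and the short aliases `hal` and `hal-login2` are hypothetical, and only the two host names shown above are assumed to exist.

```shell
# Hypothetical helper (not provided by HAL): map a short alias to one of the
# two login hosts listed above and print the matching ssh command.
hal_ssh_command() {
    user="$1"
    if [ -z "$user" ]; then
        echo "usage: hal_ssh_command <username> [hal|hal-login2]" >&2
        return 1
    fi
    case "${2:-hal}" in
        hal)        host="hal.ncsa.illinois.edu" ;;
        hal-login2) host="hal-login2.ncsa.illinois.edu" ;;
        *)          echo "unknown host alias: $2" >&2; return 1 ;;
    esac
    echo "ssh ${user}@${host}"
}
```

Printing the command rather than running it lets you check the target host before connecting.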

Interactive jobs

Starting an interactive job

Using the original Slurm command

| Code Block |

|---|

|

srun --partition=debug --pty --nodes=1 --ntasks-per-node=16 --cores-per-socket=4 \

--threads-per-core=4 --sockets-per-node=1 --mem-per-cpu=1200 --gres=gpu:v100:1 \

--time 01:30:00 --wait=0 --export=ALL /bin/bash |
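The CPU options in the srun command above fit together: one socket with 4 cores of 4 hardware threads each gives the 16 logical CPUs requested through --ntasks-per-node. Slurm reads a bare --mem-per-cpu value as megabytes. The sketch below only redoes that arithmetic. The variable names are illustrative, and it assumes each task is allocated exactly one logical CPU.

```shell
# Values copied from the srun command above.
sockets_per_node=1
cores_per_socket=4
threads_per_core=4
mem_per_cpu_mb=1200   # a bare number is interpreted by Slurm as megabytes

# Logical CPUs per node = sockets x cores per socket x threads per core.
logical_cpus=$((sockets_per_node * cores_per_socket * threads_per_core))

# Assumption: one logical CPU per task, so memory scales with the CPU count.
total_mem_mb=$((logical_cpus * mem_per_cpu_mb))

echo "logical CPUs per node: ${logical_cpus}"
echo "total memory (MB): ${total_mem_mb}"
```

If you change one of the geometry options, recompute the product so that --ntasks-per-node still matches it.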

Using the Slurm wrapper suite command

Keeping interactive jobs alive

Interactive jobs end when you disconnect from the login node, whether by choice or because of a network problem. To keep an interactive job alive, use a terminal multiplexer such as tmux.

Start tmux on the login node before requesting an interactive Slurm session with srun, then do all your work inside the tmux session.

After a disconnect, reconnect to the login node and reattach to the tmux session by typing "tmux attach", or, if you have multiple sessions running:

| Code Block |

|---|

|

tmux list-sessions

tmux attach -t <session_id> |
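Creating a session and reattaching to it can be folded into one command. This is a minimal sketch, assuming tmux is installed on the login node. The function name `hal_tmux` and the default session name `hal` are placeholders. `tmux new-session -A` attaches to the named session if it already exists and creates it otherwise.

```shell
# Hypothetical helper: attach to a named tmux session, creating it if needed.
hal_tmux() {
    session="${1:-hal}"
    # -A: attach if the session already exists, otherwise create it.
    # -s: name of the session.
    tmux new-session -A -s "$session"
}
```

Run `hal_tmux` on the login node first, then start the interactive srun job inside that session. After a disconnect, the same call reattaches to it.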

Batch jobs

Submit jobs with the original Slurm command

| Code Block |

|---|

|

#!/bin/bash

#SBATCH --job-name="demo"

#SBATCH --output="demo.%j.%N.out"

#SBATCH --error="demo.%j.%N.err"

#SBATCH --partition=gpu

#SBATCH --time=4:00:00

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=16

#SBATCH --sockets-per-node=1

#SBATCH --cores-per-socket=4

#SBATCH --threads-per-core=4

#SBATCH --mem-per-cpu=1200

#SBATCH --export=ALL

#SBATCH --gres=gpu:v100:1

srun hostname |
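Once saved to a file, a script like the one above is submitted with sbatch, which replies with a line of the form "Submitted batch job <id>". In the output file names, %j expands to that job ID and %N to the node name. The sketch below captures the ID for later use. The file name `demo.sb` and the function name `job_id_from_reply` are placeholders.

```shell
# Extract the numeric job ID from sbatch's "Submitted batch job <id>" reply.
job_id_from_reply() {
    # The ID is the fourth whitespace-separated field of the reply line.
    awk '/^Submitted batch job/ { print $4 }'
}

# Typical use on a login node (commented out so the sketch has no side effects):
#   job_id=$(sbatch demo.sb | job_id_from_reply)
#   squeue -j "$job_id"    # check the job's state
#   scancel "$job_id"      # cancel the job if needed
```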

Submit jobs with the Slurm wrapper suite

| Code Block |

|---|

|

#!/bin/bash

#SBATCH --job-name="demo"

#SBATCH --output="demo.%j.%N.out"

#SBATCH --error="demo.%j.%N.err"

#SBATCH --partition=gpux1

#SBATCH --time=4

srun hostname |

Submit a job with multiple tasks

| Code Block |

|---|

|

#!/bin/bash

#SBATCH --job-name="demo"

#SBATCH --output="demo.%j.%N.out"

#SBATCH --error="demo.%j.%N.err"

#SBATCH --partition=gpux1

#SBATCH --time=4

mpirun -n 4 hostname &

mpirun -n 4 hostname &

mpirun -n 4 hostname &

mpirun -n 4 hostname &

wait |
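The script above starts four mpirun commands in the background with `&` and then blocks on `wait` until all of them have finished. A bare `wait` returns zero regardless of how the individual commands exited, so a failed task can go unnoticed. The sketch below shows the same pattern while collecting each exit status by waiting on the recorded PIDs. The subshells with fixed exit codes are placeholders for the mpirun lines, and the function name `run_all` is illustrative.

```shell
# Run several commands concurrently and report how many of them failed.
# The subshells below stand in for the mpirun lines of a real job script.
run_all() {
    pids=""
    ( exit 0 ) & pids="$pids $!"
    ( exit 3 ) & pids="$pids $!"   # simulated failure
    ( exit 0 ) & pids="$pids $!"

    failed=0
    for pid in $pids; do
        # "wait <pid>" returns the exit status of that specific process.
        wait "$pid" || failed=$((failed + 1))
    done
    echo "$failed"
}
```

In a real job script, a nonzero count could be turned into a nonzero exit status so that Slurm records the job as failed.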

For details on using SLURM on HAL, please refer to Job management with SLURM.

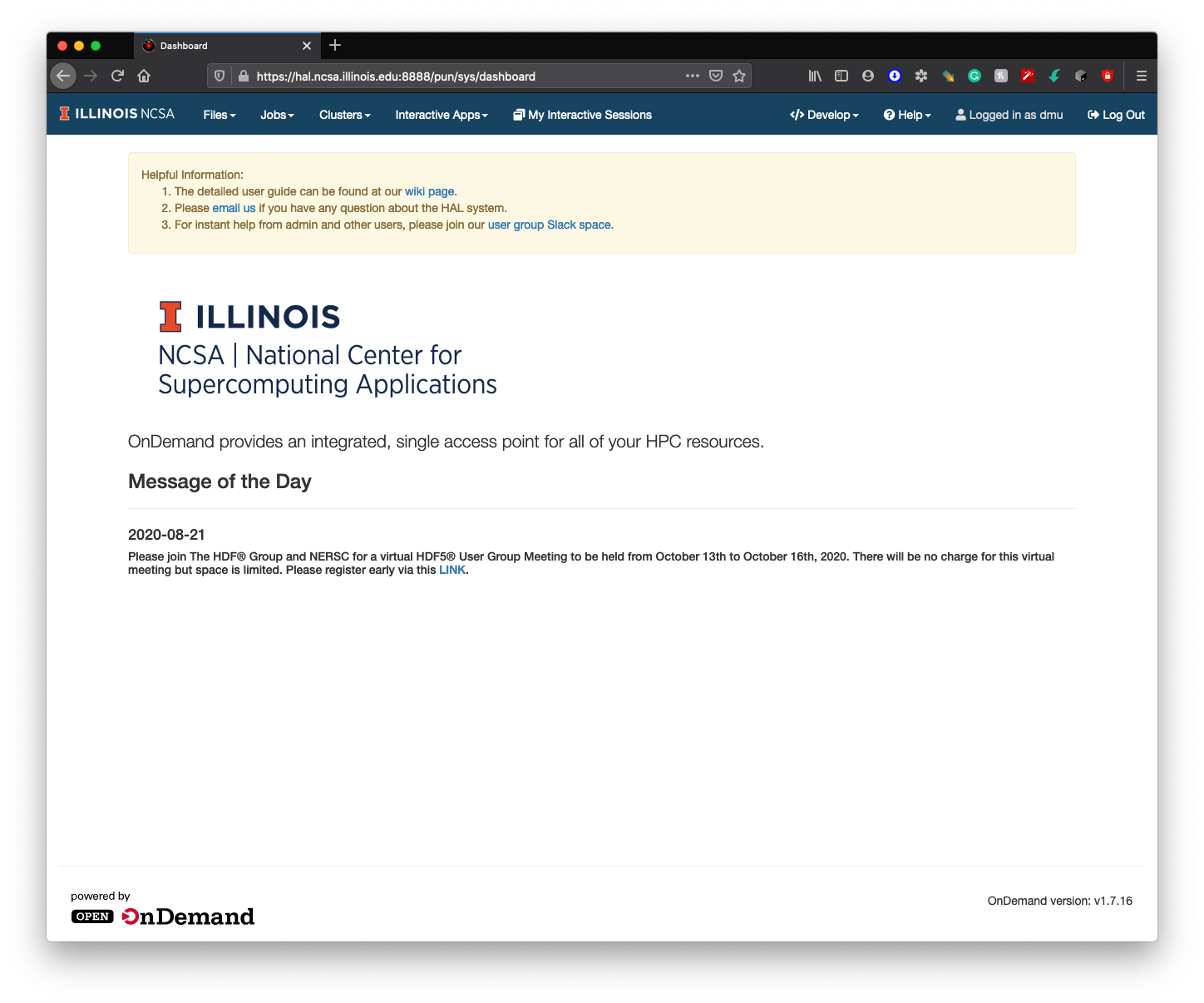

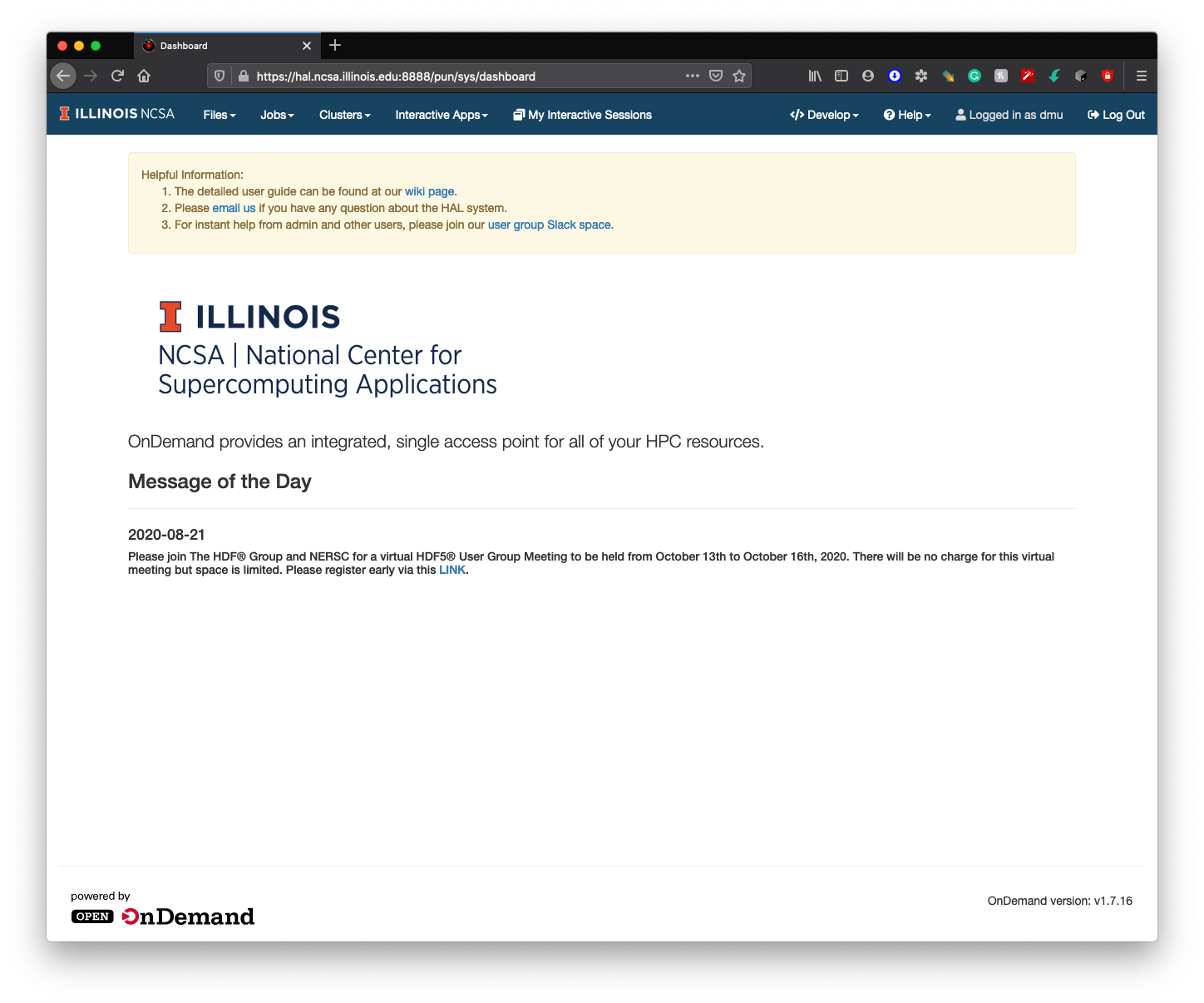

Step 4. Log on to the HAL System with HAL OnDemand

New users must first log in to the HAL system via "ssh hal.ncsa.illinois.edu" to initialize their home folders. Once the home folder is initialized, HAL OnDemand can be accessed at:

| Code Block |

|---|


|

https://hal-ondemand.ncsa.illinois.edu:8888 |


For details on HAL OnDemand, please refer to Getting started with HAL OnDemand.