| HTML |

|---|

<div style="background-color: yellow; border: 2px solid red; margin: 4px; padding: 2px; font-weight: bold; text-align: center;">

The HAL documentation has moved to <a href="https://docs.ncsa.illinois.edu/systems/hal/">https://docs.ncsa.illinois.edu/systems/hal/</a>. Please update any bookmarks you may have.

<br>

Click the link above if you are not automatically redirected in 7 seconds.

</br>

</div>

<meta http-equiv="refresh" content="7; URL='https://docs.ncsa.illinois.edu/systems/hal/en/latest/user-guide/running-jobs.html'" /> |


For complete SLURM documentation, see https://slurm.schedmd.com/. Here we only show simple examples with system-specific instructions.

...

The HAL Slurm Wrapper Suite was designed to help users use the HAL system easily and efficiently. The current version is "swsuite-v0.14", which includes:

srun (slurm command) → swrun : request resources to run interactive jobs.

sbatch (slurm command) → swbatch : request resources to submit a batch script to Slurm.

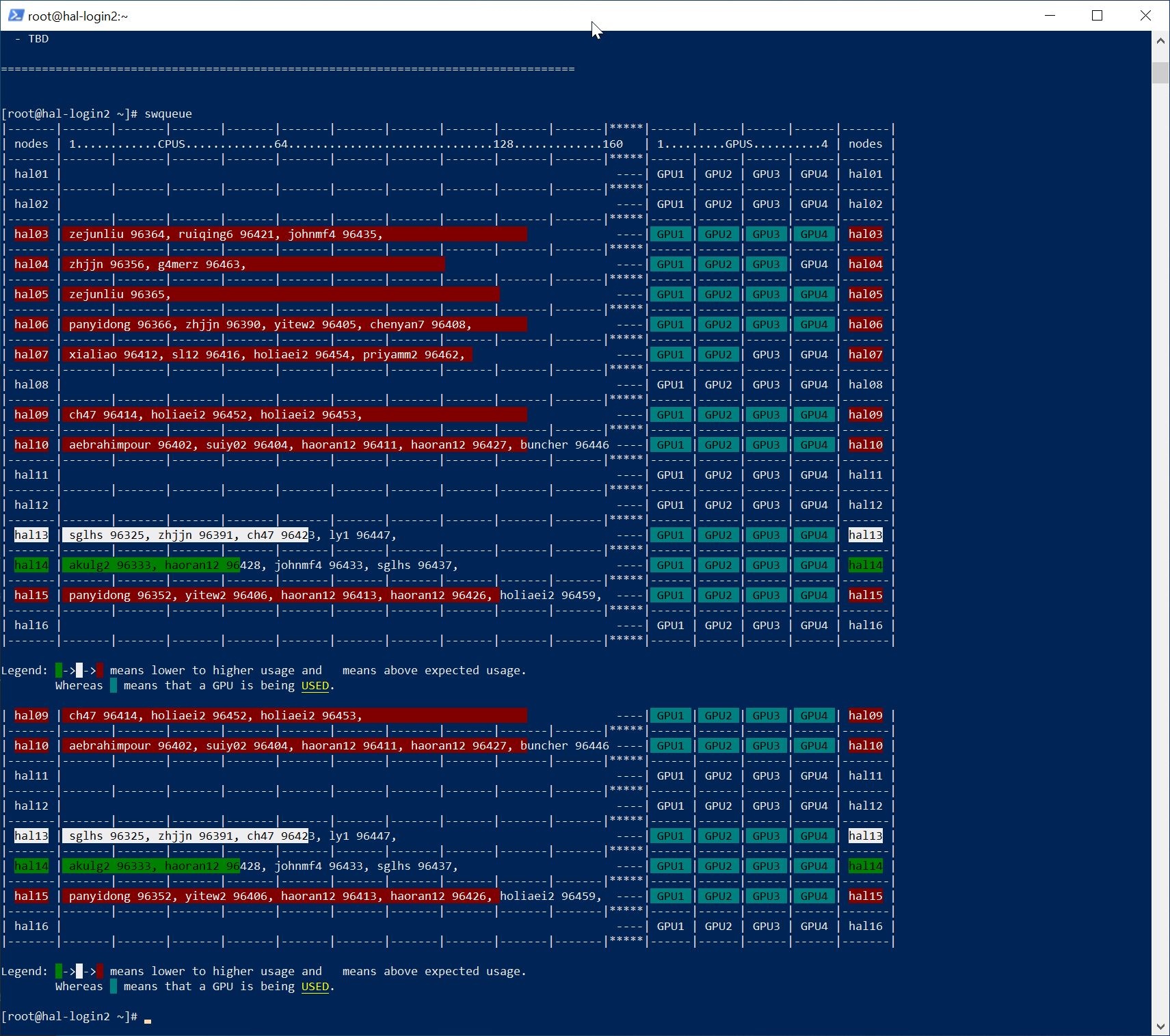

squeue (slurm command) → swqueue : check current running jobs and computational resource status.

| Info |

|---|

The Slurm Wrapper Suite is designed with people new to Slurm in mind and simplifies many aspects of job submission in favor of automation. For advanced use cases, the native Slurm commands are still available for use. |

Rule of Thumb

- Minimize the required input options.

- Consistent with the original "slurm" run-script format.

- Submits job to suitable partition based on the number of GPUs needed (number of nodes for CPU partition).
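The partition rule above can be sketched as a small lookup. This is only an illustration, not the wrapper's actual implementation: `suggest_partition` is a hypothetical helper, and the accepted counts are taken from the partition names listed in the job queue table on this page.

```bash
#!/bin/bash
# Hypothetical helper (not part of swsuite): map a resource request to a
# wrapper partition name.
# Usage: suggest_partition gpu <num_gpus>
#        suggest_partition cpu <num_nodes>
suggest_partition() {
    local kind="$1" count="$2"
    case "$kind" in
        gpu)
            case "$count" in
                1|2|3|4|8|12|16) echo "gpux${count}" ;;
                *) echo "unsupported GPU count: ${count}" >&2; return 1 ;;
            esac
            ;;
        cpu)
            case "$count" in
                1|2|4|8|16) echo "cpun${count}" ;;
                *) echo "unsupported node count: ${count}" >&2; return 1 ;;
            esac
            ;;
        *)
            echo "usage: suggest_partition gpu|cpu <count>" >&2
            return 1
            ;;
    esac
}

suggest_partition gpu 4    # prints: gpux4
suggest_partition cpu 2    # prints: cpun2
```

GPU partitions are keyed by the number of GPUs, while CPU partitions are keyed by the number of nodes, so the two cases are kept separate.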

Usage

| Warning |

|---|

Request Only As Much As You Can Make Use Of. Many applications require some modification to make use of more than one GPU for computation. Almost all programs require nontrivial optimizations to run efficiently on more than one node (partitions gpux8 and larger). Monitor your usage and avoid occupying resources that you cannot make use of. |

- swrun -p <partition_name> -c <cpu_per_gpu> -t <walltime> -r <reservation_name>

- <partition_name> (required) : cpun1, cpun2, cpun4, cpun8, gpux1, gpux2, gpux3, gpux4, gpux8, gpux12, gpux16.

- <cpu_per_gpu> (optional) : 16 cpus (default), range from 16 cpus to 40 cpus.

- <walltime> (optional) : 4 hours (default), range from 1 hour to 24 hours in integer format.

- <reservation_name> (optional) : reservation name granted to user.

- example: swrun -p gpux4 -c 40 -t 24 (request a full node: 1x node, 4x gpus, 160x cpus, 24x hours)

- Using interactive jobs to run long-running scripts is not recommended. If you are going to walk away from your computer while your script is running, consider submitting a batch job. Unattended interactive sessions can remain idle until they run out of walltime and thus block out resources from other users. We will issue warnings when we find resource-heavy idle interactive sessions and repeated offenses may result in revocation of access rights.

- swbatch <run_script>

- <run_script> (required) : same as original slurm batch.

- <job_name> (optional) : job name.

- <output_file> (optional) : output file name.

- <error_file> (optional) : error file name.

- <partition_name> (required) : cpun1, cpun2, cpun4, cpun8, gpux1, gpux2, gpux3, gpux4, gpux8, gpux12, gpux16.

- <cpu_per_gpu> (optional) : 16 cpus (default), range from 16 cpus to 40 cpus.

- <walltime> (optional) : 24 hours (default), range from 1 hour to 24 hours in integer format.

- <reservation_name> (optional) : reservation name granted to user.

example: swbatch demo.swb

demo.swb:

    #!/bin/bash
    #SBATCH --job-name="demo"
    #SBATCH --output="demo.%j.%N.out"
    #SBATCH --error="demo.%j.%N.err"
    #SBATCH --partition=gpux1
    #SBATCH --time=4

    srun hostname
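For repeated submissions, a run script like the one above can be generated from a few parameters. The following is a minimal sketch: `write_swb_script` is a hypothetical helper, not part of swsuite, and it only writes the file. Submitting it with `swbatch` is left as a separate step to run on HAL.

```bash
#!/bin/bash
# Hypothetical helper: write a minimal swbatch run script.
# Usage: write_swb_script <job_name> <partition_name> <walltime_hours> <script_path>
write_swb_script() {
    local job_name="$1" partition="$2" hours="$3" path="$4"
    cat > "$path" <<EOF
#!/bin/bash
#SBATCH --job-name="${job_name}"
#SBATCH --output="${job_name}.%j.%N.out"
#SBATCH --error="${job_name}.%j.%N.err"
#SBATCH --partition=${partition}
#SBATCH --time=${hours}

srun hostname
EOF
}

# Write the script into a scratch directory and show it.
out_dir="$(mktemp -d)"
write_swb_script demo gpux1 4 "${out_dir}/demo.swb"
cat "${out_dir}/demo.swb"
# On HAL, submit it with: swbatch "${out_dir}/demo.swb"
```

The walltime is written as a plain integer number of hours, matching the format the wrapper options above describe.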

- swqueue

- example:

New Job Queues (SWSuite only)


| Partition Name | Priority | Max Walltime | Nodes Allowed | Min-Max CPUs Per Node Allowed | Min-Max Mem Per Node Allowed | GPU Allowed | Local Scratch | Description |
|---|---|---|---|---|---|---|---|---|
| gpux1 | normal | 24 hrs | 1 | 16-40 | 19.2-48 GB | 1 | none | designed to access 1 GPU on 1 node to run sequential and/or parallel jobs. |
| gpux2 | normal | 24 hrs | 1 | 32-80 | 38.4-96 GB | 2 | none | designed to access 2 GPUs on 1 node to run sequential and/or parallel jobs. |
| gpux3 | normal | 24 hrs | 1 | 48-120 | 57.6-144 GB | 3 | none | designed to access 3 GPUs on 1 node to run sequential and/or parallel jobs. |
| gpux4 | normal | 24 hrs | 1 | 64-160 | 76.8-192 GB | 4 | none | designed to access 4 GPUs on 1 node to run sequential and/or parallel jobs. |
| gpux8 | normal | 24 hrs | 2 | 64-160 | 76.8-192 GB | 8 | none | designed to access 8 GPUs on 2 nodes to run sequential and/or parallel jobs. |
| gpux12 | normal | 24 hrs | 3 | 64-160 | 76.8-192 GB | 12 | none | designed to access 12 GPUs on 3 nodes to run sequential and/or parallel jobs. |
| gpux16 | normal | 24 hrs | 4 | 64-160 | 76.8-192 GB | 16 | none | designed to access 16 GPUs on 4 nodes to run sequential and/or parallel jobs. |
| cpun1 | normal | 24 hrs | 1 | 96-96 | 115.2-115.2 GB | 0 | none | designed to access 96 CPUs on 1 node to run sequential and/or parallel jobs. |
| cpun2 | normal | 24 hrs | 2 | 96-96 | 115.2-115.2 GB | 0 | none | designed to access 96 CPUs on 2 nodes to run sequential and/or parallel jobs. |
| cpun4 | normal | 24 hrs | 4 | 96-96 | 115.2-115.2 GB | 0 | none | designed to access 96 CPUs on 4 nodes to run sequential and/or parallel jobs. |
| cpun8 | normal | 24 hrs | 8 | 96-96 | 115.2-115.2 GB | 0 | none | designed to access 96 CPUs on 8 nodes to run sequential and/or parallel jobs. |
| cpun16 | normal | 24 hrs | 16 | 96-96 | 115.2-115.2 GB | 0 | none | designed to access 96 CPUs on 16 nodes to run sequential and/or parallel jobs. |
| cpu_mini | normal | 24 hrs | 1 | 8-8 | 9.6-9.6 GB | 0 | none | designed to access 8 CPUs on 1 node to run tensorboard jobs. |
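The figures in the table follow a simple pattern, which the sketch below reproduces so you can check a request before submitting it. It assumes 4 GPUs per node and 1.2 GB of memory per CPU, both inferred from values on this page. `gpu_request_summary` is a hypothetical helper and not part of swsuite. It reports totals across all nodes, whereas the table lists per-node ranges.

```bash
#!/bin/bash
# Hypothetical helper: estimate what a gpuxN request adds up to.
# Assumptions: 4 GPUs per node, 1.2 GB of memory per CPU.
# Usage: gpu_request_summary <num_gpus> <cpu_per_gpu>
gpu_request_summary() {
    local gpus="$1" cpu_per_gpu="$2"
    if [ "$cpu_per_gpu" -lt 16 ] || [ "$cpu_per_gpu" -gt 40 ]; then
        echo "cpu_per_gpu must be between 16 and 40" >&2
        return 1
    fi
    local nodes=$(( (gpus + 3) / 4 ))      # round up to whole nodes
    local cpus=$(( gpus * cpu_per_gpu ))   # total CPUs across all nodes
    local mem
    mem=$(awk -v c="$cpus" 'BEGIN { printf "%.1f", c * 1.2 }')
    echo "nodes=${nodes} gpus=${gpus} cpus=${cpus} mem_gb=${mem}"
}

gpu_request_summary 4 16   # prints: nodes=1 gpus=4 cpus=64 mem_gb=76.8
gpu_request_summary 8 16   # prints: nodes=2 gpus=8 cpus=128 mem_gb=153.6
```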

HAL Wrapper Suite Example Job Scripts

...

| Script Name | Job Type | Partition | Walltime | Nodes | CPU | GPU | Memory | Description |
|---|---|---|---|---|---|---|---|---|
| run_gpux1_16cpu_24hrs.sh | interactive | gpux1 | 24 hrs | 1 | 16 | 1 | 19.2 GB | submit interactive job, 1x node for 24 hours w/ 16x CPU 1x GPU task in "gpux1" partition. |
| run_gpux2_32cpu_24hrs.sh | interactive | gpux2 | 24 hrs | 1 | 32 | 2 | 38.4 GB | submit interactive job, 1x node for 24 hours w/ 32x CPU 2x GPU task in "gpux2" partition. |
| sub_gpux1_16cpu_24hrs.swb | batch | gpux1 | 24 hrs | 1 | 16 | 1 | 19.2 GB | submit batch job, 1x node for 24 hours w/ 16x CPU 1x GPU task in "gpux1" partition. |
| sub_gpux2_32cpu_24hrs.swb | batch | gpux2 | 24 hrs | 1 | 32 | 2 | 38.4 GB | submit batch job, 1x node for 24 hours w/ 32x CPU 2x GPU task in "gpux2" partition. |
| sub_gpux4_64cpu_24hrs.swb | batch | gpux4 | 24 hrs | 1 | 64 | 4 | 76.8 GB | submit batch job, 1x node for 24 hours w/ 64x CPU 4x GPU task in "gpux4" partition. |
| sub_gpux8_128cpu_24hrs.swb | batch | gpux8 | 24 hrs | 2 | 128 | 8 | 153.6 GB | submit batch job, 2x node for 24 hours w/ 128x CPU 8x GPU task in "gpux8" partition. |
| sub_gpux16_256cpu_24hrs.swb | batch | gpux16 | 24 hrs | 4 | 256 | 16 | 153.6 GB | submit batch job, 4x node for 24 hours w/ 256x CPU 16x GPU task in "gpux16" partition. |

Native SLURM style

Available Queues

| Name | Priority | Max Walltime | Max Nodes | Min/Max CPUs | Min/Max RAM | Min/Max GPUs | Description |

|---|---|---|---|---|---|---|---|

| cpu | normal | 24 hrs | 16 | 1-96 | 1.2GB per CPU | 0 | Designed for CPU-only jobs |

| gpu | normal | 24 hrs | 16 | 1-160 | 1.2GB per CPU | 0-64 | Designed for jobs utilizing GPUs |

| debug | high | 4 hrs | 1 | 1-160 | 1.2GB per CPU | 0-4 | Designed for single-node, short jobs. Jobs submitted to this queue receive higher priority than other jobs of the same user. |
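When using the native queues directly, the limits in the table can be turned into a quick pre-submission check. The sketch below is one possible reading of the table: `suggest_native_partition` is a hypothetical helper, and routing every short single-node job to `debug` is an assumption about preference, not a site policy.

```bash
#!/bin/bash
# Hypothetical helper: suggest a native Slurm partition from the table above.
# Usage: suggest_native_partition <num_nodes> <num_gpus> <walltime_hours>
suggest_native_partition() {
    local nodes="$1" gpus="$2" hours="$3"
    if [ "$hours" -gt 24 ] || [ "$nodes" -gt 16 ] || [ "$gpus" -gt 64 ]; then
        echo "request exceeds the 24-hour / 16-node / 64-GPU limits" >&2
        return 1
    fi
    if [ "$nodes" -eq 1 ] && [ "$gpus" -le 4 ] && [ "$hours" -le 4 ]; then
        echo "debug"   # single node, short job
    elif [ "$gpus" -gt 0 ]; then
        echo "gpu"
    else
        echo "cpu"
    fi
}

suggest_native_partition 1 1 2     # prints: debug
suggest_native_partition 2 8 24    # prints: gpu
suggest_native_partition 4 0 12    # prints: cpu
```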

Submit Interactive Job with "srun"

| Code Block |

|---|

srun --partition=debug --pty --nodes=1 --ntasks-per-node=16 --cores-per-socket=4 --threads-per-core=4 --sockets-per-node=1 --mem-per-cpu=1200 --gres=gpu:v100:1 --time 01:30:00 --wait=0 --export=ALL /bin/bash |
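In the command above, the task count equals cores per socket times threads per core (4 x 4 = 16). The sketch below prints, but does not run, an equivalent command for a different core count. It assumes that this relation is intended, that 4 threads per core is fixed, and that a single V100 GPU is requested. `print_srun_cmd` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: print (not run) an interactive srun command line.
# Assumption: ntasks-per-node = cores-per-socket * threads-per-core.
# Usage: print_srun_cmd <cores_per_socket> <hh:mm:ss>
print_srun_cmd() {
    local cores="$1" walltime="$2"
    local threads_per_core=4                      # assumed fixed
    local tasks=$(( cores * threads_per_core ))   # logical CPUs requested
    echo "srun --partition=debug --pty --nodes=1" \
         "--ntasks-per-node=${tasks} --cores-per-socket=${cores}" \
         "--threads-per-core=${threads_per_core} --sockets-per-node=1" \
         "--mem-per-cpu=1200 --gres=gpu:v100:1" \
         "--time ${walltime} --wait=0 --export=ALL /bin/bash"
}

# Reproduces the documented command (16 tasks on 4 cores).
print_srun_cmd 4 01:30:00
```

Printing the command first makes it easy to review the requested resources before starting an interactive session.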

Submit Batch Job

...

| Code Block |

|---|

scancel [job_id] # cancel job with [job_id] |

Job Queues

...

Max CPUs Per Node

...

Max Memory Per CPU (GB)

...

HAL Example Job Scripts

New users should check the example job scripts at "/opt/apps/samples-runscript" and request adequate resources.

...

Max Walltime

...

PBS style

Some PBS commands are supported by SLURM.

...