Earlier this week the NCSA Cybersecurity Division hosted a lunchtime security talk. This session covered collection, analysis, and alerting tools used by the Security team. The talk began with security engineer Jacob Gallion giving a summary of the Incident Response Security Team (IRST): who they are and what they do. Later, lead security engineer Chris Clausen presented on the various services used to monitor network traffic, as well as common security incidents that the team encounters at NCSA.

One of the major takeaways from the presentation is that the security team encourages NCSA staff to use the System Vetting Checklist when setting up new systems to reduce the attack surface.

Presentation slides: IRST Presentation May 2023.pptx

NCSA will again be participating in the NSF Cybersecurity Summit this year. The 2022 Summit Program includes presentations by NCSA's Jim Basney (Trusted CI Update) and Phuong Cao (A data anonymization proxy for interactive log analyses). Jim is also co-organizing a workshop on Token-based Authentication and Authorization at the Summit. NCSA's Jeannette Dopheide will be emceeing the event.

The Cybersecurity Division (CSD) at the National Center for Supercomputing Applications (NCSA), a department of the University of Illinois at Urbana-Champaign, is hosting a webinar for Illinois K-12 teachers on Tuesday, August 23rd from 4pm - 5pm Central time.

The session will cover various cybersecurity topics, including securing accounts and passwords, multifactor authentication, wifi best practices, data privacy, and email and phishing scams.

Click here to view the video on YouTube

Click here to download a PDF of the slides

For questions about the presentation, or security in general, contact help+security@ncsa.illinois.edu.

To learn more about security at NCSA, see our website, our GitHub, and follow us on Twitter at @NCSASecurity.

NCSA staff members and affiliates will again be participating in the NSF Cybersecurity Summit this year.

The 2021 Summit Program includes presentations by the following NCSA people (names in bold):

Finding the sweet spot: How much security is needed for NSF projects? (Panelists: Rebecca L. Keiser, Alex Withers, Pamela Nigro, Everly Health | Moderator: Anita Nikolich)

SciAuth: Deploying Interoperable and Usable Authorization Tokens to Enable Scientific Collaborations (Jim Basney, Brian Bockelman, Derek Weitzel)

Observations on Software Assurance in Scientific Computing (Andrew Adams, Kay Avila, Elisa Heymann, Sean Peisert)

Ransomware Honeypot (Phuong Cao)

NCSA's Jim Basney is also co-organizing two co-located workshops at the Summit

NCSA's John Zage will again be contributing to the Summit report, which is published after the event. Last year's report is published at https://hdl.handle.net/2142/108907.

NCSA's CILogon team will be participating in InCommon CAMP Week October 4-8, 2021. The meeting will gather the identity and access management (IAM) community to discuss the latest advancements in federated authentication, identity assurance, supporting NSF and NIH researchers, and many other IAM topics. NCSA's Jim Basney will be representing the CILogon project on the closing plenary panel about "Strategies to Enable Federated Access to SAML-shy Resources and Services" on Tuesday, October 5 at 1pm CDT.

The CAMP program will also include presentations from other IAM experts from the University of Illinois, including Eric Coleman, Tracy Tolliver, and Keith Wessel, covering topics such as "Library Access of the Future", "A story of collaboration to help protect campuses from COVID-19" (using the Safer Illinois app), "Linking multiple SSO Systems for a Better User Experience", and "Splunk and Advanced Log Analysis".

This blog post was originally published by Trusted CI.

NOTE: If you have any experience with SOC2 compliance and want to share resources, slideshows, presentations, etc., please email links and other materials to Jeannette Dopheide <jdopheid@illinois.edu> and we will share them during the presentation.

NCSA's Alex Withers is presenting the talk, NCSA Experience with SOC2 in the Research Computing Space, on Monday, August 30th, at 11am (Eastern).

Please register here.

As the demand for research computing dealing with sensitive data increases, institutions like the National Center for Supercomputing Applications work to build the infrastructure that can process and store these types of data. Along with the infrastructure can come a host of regulatory obligations including auditing and examination requirements. We will present NCSA’s recent SOC2 examination of its healthcare computing infrastructure and how we ensured our controls, data collection and processes were properly documented, tested and poised for the examination. Additionally, we will show how other research and educational organizations might handle a SOC2 examination and what to expect from such an examination. From a broader perspective, the techniques and lessons learned can be applied to much more than a SOC2 examination and could potentially be used to save time and resources for any audit or examination.

Speaker Bio:

Alex Withers is an Assistant Director for Cyber Security and the Chief Information Security Officer at the National Center for Supercomputing Applications (NCSA). Additionally, he is the security co-manager for the XSEDE project and NCSA’s HIPAA Security Liaison. He is also a PI and co-PI for a number of NSF-funded cybersecurity projects.

Join Trusted CI's announcements mailing list for information about upcoming events. To submit topics or requests to present, see our call for presentations. Archived presentations are available on our site under "Past Events."

In this installment of NCSA’s blog series on SOC2 we will discuss how we’ve implemented our SOC2 Internal Testing Workflow. NCSA uses Atlassian’s JIRA product for a variety of purposes ranging from IT incident tracking to project management. Its general design as a workflow framework made it suitable for our internal testing workflow for tracking and testing our controls. A variety of products are available specifically for this purpose; however, their cost, and our lack of the knowledge and resources required to deploy them, led us to leverage our existing JIRA system.

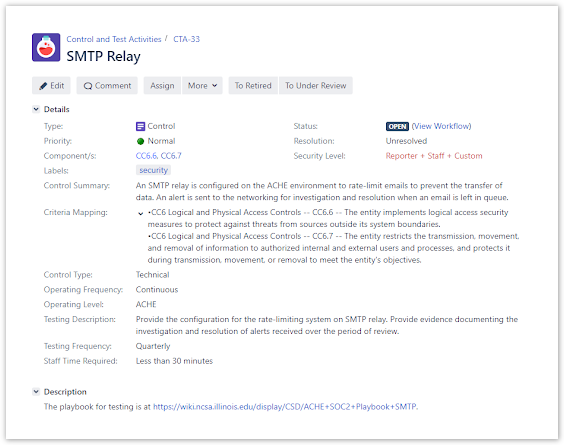

The best way to illustrate how we use JIRA to track and test our controls is to provide an example of one of these controls. The first control is a simple control that requires outbound SMTP be rate limited on the SMTP relay:

This snapshot of the JIRA issue tracking the SMTP rate limit control has several fields that should be pointed out and explained. The “Control Summary” field describes briefly our control. The “Criteria Mapping” shows which Trust Services Criteria our control is mapped onto. In this particular example it is mapped onto CC6.6 and CC6.7 of the Trust Services Criteria:

| Criterion | Description |
| --- | --- |
| CC6.6 | The entity implements logical access security measures to protect against threats from sources outside its system boundaries. |
| CC6.7 | The entity restricts the transmission, movement, and removal of information to authorized internal and external users and processes, and protects it during transmission, movement, or removal to meet the entity’s objectives. |

Recall that this mapping is part of NCSA’s system description document provided during the SOC2 examination. During the examination this control, along with the others, is examined for the suitability of design and operating effectiveness of the controls in the system description. The “Components” field provides a reference that collects and displays all other NCSA controls mapped to Trust Services Criteria CC6.6 and CC6.7. This allows us to quickly identify and examine all other test controls for those specific criteria.
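The lookup that the “Components” field provides can be pictured as a reverse index from criteria to controls. The following is only a minimal sketch of that idea in Python, not how JIRA implements it; the control IDs and the two extra controls are hypothetical and exist purely for illustration.

```python
from collections import defaultdict

# Hypothetical control catalog. Only the SMTP rate limit control and its
# CC6.6/CC6.7 mapping come from the post; the IDs and other entries are made up.
CONTROLS = {
    "CTRL-001": {
        "summary": "Outbound SMTP is rate limited on the SMTP relay",
        "criteria": ["CC6.6", "CC6.7"],
    },
    "CTRL-002": {
        "summary": "Example control restricting external access",
        "criteria": ["CC6.6"],
    },
    "CTRL-003": {
        "summary": "Example control protecting data in transit",
        "criteria": ["CC6.7"],
    },
}


def index_by_criterion(controls):
    """Build a reverse index: criterion -> sorted list of control IDs mapped onto it."""
    index = defaultdict(list)
    for control_id, control in controls.items():
        for criterion in control["criteria"]:
            index[criterion].append(control_id)
    return {criterion: sorted(ids) for criterion, ids in index.items()}


def related_controls(controls, control_id):
    """Return the other controls sharing at least one criterion with control_id."""
    index = index_by_criterion(controls)
    related = set()
    for criterion in controls[control_id]["criteria"]:
        related.update(index[criterion])
    related.discard(control_id)
    return sorted(related)
```

Calling `related_controls(CONTROLS, "CTRL-001")` returns every other control in this toy catalog mapped to CC6.6 or CC6.7, which is the kind of quick cross-reference described above.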

The other fields towards the bottom of the JIRA issue describe the control type, operating/testing frequency, and time required to complete the test. These fields are specific to our internal testing workflow and have nothing to do with SOC2, but do allow us to understand both the level of effort the testing takes and how often the control should be tested.

Lastly, the description of this JIRA issue links to a playbook. Because the control is a “Technical” Control Type, the playbook is written and maintained by the administrators who manage the SMTP relay that is the focus of this control. The playbook provides a step-by-step procedure for testing the rate limiting and alerting capabilities of the SMTP relay and explains how that test’s outcome can be captured. The playbook is written in such a manner as to allow any authorized administrator to perform the test.

Below is a snippet of the playbook document.

When this test is performed, the JIRA issue for this control implements the workflow described in the previous section:

This creates a JIRA sub-issue and places the control “In Review”. Assuming the test went well, evidence of a satisfactory test of the SMTP relay is attached to this sub-issue and it is closed, placing the control back into its open state.

In some circumstances, the evidence attached to the sub-issue can be used during a SOC2 examination. But more importantly, this internal test workflow ensures that, at any given time, we can demonstrate the operating effectiveness of our controls. Periodic testing ensures that we’re collecting the proper evidence, confirms that the control is working, and allows us to identify inefficiencies that may consume more staff time than is necessary.

It should be noted that there are drawbacks to using JIRA for implementing this type of internal test workflow. JIRA is an issue tracking product at its core and is not designed for these types of looped workflows. JIRA also does not provide good options for managing or changing an issue’s state based on a time cadence. This feature can be implemented externally through an API, but that assumes proper developer and JIRA know-how. Also, while JIRA does provide for extensive customization of an issue’s fields, there are many fields that are immutable. Take, for example, the “Resolution” field in the SMTP relay control above. It is set to “Unresolved”, which can be confusing for an issue that is currently in the “Monitor” state waiting to be tested. To overcome these drawbacks, a fair amount of manual intervention is needed to monitor and shepherd the issues through the internal test workflow. Additionally, documentation and training materials have to be created and maintained if staff are expected to be assigned these control tests and execute them properly.
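To make the time-cadence gap concrete, here is a small sketch of the kind of external check that could decide which controls are due for a test. It is an illustration under stated assumptions, not NCSA's tooling: the frequency names, the day counts, and the record fields (`id`, `state`, `last_tested`, `frequency`) are all hypothetical, and the sketch deliberately stops short of calling any JIRA API.

```python
from datetime import date, timedelta

# Assumed testing frequencies expressed in days (illustrative values only).
FREQUENCY_DAYS = {"monthly": 30, "quarterly": 90, "annually": 365}


def next_test_date(last_tested, frequency):
    """Return the date on which a control is next due for testing."""
    return last_tested + timedelta(days=FREQUENCY_DAYS[frequency])


def controls_due(controls, today):
    """Return IDs of controls in the 'Monitor' state whose next test date has arrived.

    Controls already 'In Review' are skipped because a test is in progress.
    """
    due = []
    for control in controls:
        if control["state"] != "Monitor":
            continue
        if next_test_date(control["last_tested"], control["frequency"]) <= today:
            due.append(control["id"])
    return due
```

A scheduled job could run a check like this daily and then open the review sub-issue for each returned control, whether by hand or through whatever automation is available.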

The Cybersecurity Division at NCSA continues to grow! Over the past six months we have added three more members to our team.

Ryan Walker is a Sr. Security Engineer who previously worked for both the University security and networking teams. He is interested in combining the seemingly disparate ideas of enabling connectivity and securing information. Ryan says he was an avid reader and traveler, though recently he spends much of his free time playing in his backyard with his family. (Editor's Note: We hope Ryan's travel plans open a bit more over the summer.)

Jacob Gallion is a Security Engineer who returned to NCSA after previously serving as a student intern with the Incident Response and Security Team. He received his cybersecurity degree at Illinois State, just before the COVID shutdown began. When asked what he likes about cybersecurity as a career path, he said he enjoys learning the many aspects of IT to further his understanding of cybersecurity.

Phuong Cao is a Student Research Scientist who is currently finishing his PhD in Electrical & Computer Engineering. Previously he worked at several research labs (IBM, Microsoft) and companies (LinkedIn, Akamai). He has experience in statistical data analyses, machine learning, and formal methods applied to critical problems in security such as reverse engineering malware, detecting attacks using probabilistic graphical models, and verifying correctness of smart contracts. Phuong says he enjoys helping secure NCSA infrastructure while producing novel security research. Currently, Phuong is evaluating factor graph-based attack detection on XSEDE logs, analyzing RDP logs, and verifying security policies in federated authentication.

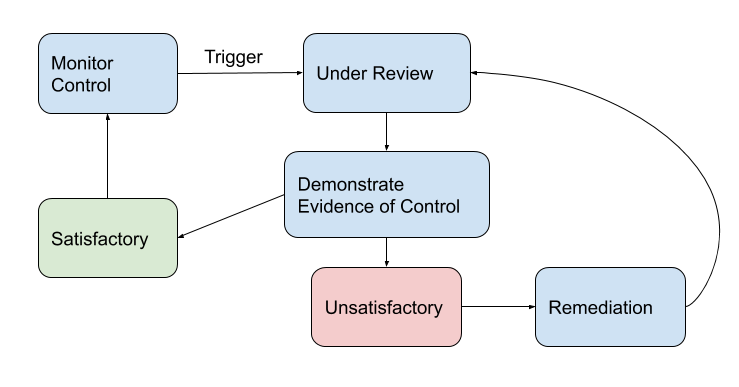

As described in our previous blog post, our approach to SOC 2 relies on establishing internal processes, building a platform to manage our controls and tests, and the participation of our staff to implement the controls and provide evidence of their operation. We have designed a compliance and internal auditing workflow to manage the lifecycle of controls and testing activities. This workflow is depicted in the accompanying figure.

All controls begin in the state “monitor,” meaning they are designed to meet requirements, are documented, and expected to be operating as designed. Control Owners are responsible for ensuring that controls are being monitored. At certain times, a trigger moves a control into a review state, indicating that the control is ready for an internal test. A trigger might be an established periodic testing frequency, such as a quarterly review; a formal change to the system that affects the design of a control; a remediation of an incident that indicates a control is not operating as expected; or a third-party evaluation, such as the SOC 2 assessment.

A control under review initiates a test activity, which is a request for demonstration that the control is operating as expected. Test Owners execute the test and provide evidence of operation. When a test is complete, the Control Owner reviews the results of the test.

If the results sufficiently demonstrate the control is operating as expected and fulfills the scope of the control, the test is deemed “satisfactory.” The test activity is resolved and the control is moved back to the monitor state until the next test activity is triggered.

However, if the results are insufficient, then the test is marked “unsatisfactory.” A test could prove unsatisfactory for a variety of reasons; for example, if the instructions for demonstration are incomplete or the control is not implemented properly. In this case, the system is considered to be operating out of compliance with the control requirements and a remediation is needed. The Control Owner works with the Test Owner and other staff to plan and implement the remediation activity.

When the remediation is completed, another test activity is performed to demonstrate that the control is now operating as expected. The workflow repeats: the control is moved to “under review,” and a test is initiated, executed by the Test Owner, and reviewed by the Control Owner. Once the test results are satisfactory, the control is moved back to “monitor.”
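The lifecycle described above can be sketched as a small state machine. This is a minimal illustration rather than our actual implementation: the class and method names are hypothetical, and “remediation” is modeled here as its own state for clarity, which is an assumption on top of the two states named in the text.

```python
from enum import Enum


class State(Enum):
    MONITOR = "monitor"
    UNDER_REVIEW = "under review"
    REMEDIATION = "remediation"  # assumed state, not named as such in the post


class Control:
    """Tracks one control through the monitor/review/remediation loop."""

    def __init__(self, name):
        self.name = name
        self.state = State.MONITOR  # all controls begin in "monitor"
        self.evidence = []          # evidence collected from every test

    def trigger_review(self, reason):
        """A trigger (periodic test, system change, incident, assessment) starts a test."""
        if self.state is not State.MONITOR:
            raise ValueError(f"cannot trigger review from {self.state.value}")
        self.state = State.UNDER_REVIEW
        self.last_trigger = reason

    def record_test(self, satisfactory, evidence):
        """The Control Owner reviews the Test Owner's results."""
        if self.state is not State.UNDER_REVIEW:
            raise ValueError(f"no test in progress while in {self.state.value}")
        self.evidence.append(evidence)
        # Satisfactory results return the control to monitoring;
        # unsatisfactory results require remediation.
        self.state = State.MONITOR if satisfactory else State.REMEDIATION

    def complete_remediation(self):
        """After remediation, the control is retested."""
        if self.state is not State.REMEDIATION:
            raise ValueError(f"no remediation in progress while in {self.state.value}")
        self.state = State.UNDER_REVIEW
```

Because each transition checks the current state, a control cannot skip a test or return to “monitor” without a recorded result, which is exactly the enforcement that a plain spreadsheet lacks.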

This simple workflow allows us to manage continuous internal testing and specify staff roles and responsibilities in the compliance management process. More importantly, when we undergo a SOC 2 evaluation we will be able to rely on this workflow and the evidence collected from all of the previous tests.

As previously discussed, NCSA’s Advanced Computational Health Enclave (ACHE) is undergoing a SOC 2 examination. This blog series will document our experiences, lessons learned and best practices.

During the initial drafting of our narrative and controls it quickly became apparent that a SOC 2 examination cannot be limited to security and policy staff. We could not produce an accurate narrative of our system and organizational controls without the input, knowledge and experience of the entire organization. To ensure that everyone was on board and providing the information and input we needed, we assigned broad control categories to division heads and/or group leaders as appropriate. Collecting the controls and processes involved both security policy staff and the control group assignees. It also involved the individual staff who were most familiar with the implementation of these controls and processes, and the security staff who ensured that they were properly implemented.

Further, each control needs to be regularly tested. The reason for this is two-fold. First, internal monitoring provides assurance that our controls are operating as expected, and that we remain in compliance and mitigate risks. Second, the outputs of regular testing create a repository of documented evidence that controls are operating. This is especially necessary for the Type 2 examination, which assesses how controls are operating over a period of time. Collecting the evidence throughout the review period avoids a last-minute collection of documentation for all controls at the end of the review. The frequency of testing a particular control depends on how often the control operates (continuously, monthly, annually, etc.), availability of resources to perform the tests, and our risk tolerance. For example, we may choose to test newer or more complex controls more often than well-established controls we can better trust are operating.

Due to the sheer number of controls and staff, coordinating overall responsibilities and area ownership can very quickly get out of hand. NCSA’s approach evolved gradually from simple spreadsheets to collaborative tables in a wiki space to a custom GRC tool (of which more will be discussed in future posts). An example spreadsheet entry would look like:

While this could easily be done in a collaborative tool like Google Sheets, it becomes difficult over time to track ownership, testing, and status of the controls. One reason is that it relies on either the control owner or tester to maintain the status of their respective controls within the spreadsheet. Another major factor is that spreadsheets do not enforce a workflow. For example, if a control is being tested and the test fails, then we must rely on the editor to properly annotate that control and set its status appropriately.

Another tool that can be used to track controls and processes is a collaborative wiki tool such as Atlassian’s Confluence. While a tool such as Confluence provides good integration with documentation for a control catalog, it only alleviates some of the problems described above. In the end it places the primary burden on the staff to manage their respective controls, which can lead to a messy situation in which controls are not properly tested or documented.

In the next post we’ll outline a workflow for how we’ve managed our controls and processes being examined during our SOC 2 Type 2 phase.

NCSA’s Advanced Computational Health Enclave (ACHE) is a multi-tenant environment providing high-performance computing (HPC) for research involving electronic Protected Health Information (ePHI). NCSA follows HIPAA standards and has implemented a set of security controls to ensure the protection of ePHI.

As a validation of our controls, NCSA is pursuing a SOC 2 Type 2 certification for its ACHE environment. A SOC 2 is an assessment of a service organization’s system and organizational controls. These internal controls, including policies, business processes, and technical controls, are assessed according to one or more of the AICPA’s Trust Services Criteria for Security, Availability, Processing Integrity, Confidentiality, and Privacy.

To become SOC 2 Type 2 certified, a service organization undergoes a third-party auditing procedure which examines both the design and operating effectiveness of the controls. During the evaluation, the service organization presents evidence that controls are well-designed, sufficiently meet the relevant Trust Services Criteria, and are operating as expected over time.

In this first certification process NCSA will be evaluated over a six-month period. Beginning July 1, 2020, we are documenting our controls, periodically testing them, collecting operational evidence, and mitigating non-compliance, following a methodology similar to internal compliance management. In this blog series we will describe the systems and methods we have set up including how we keep track of our controls, how we test our controls, and workflows we have configured to manage evidence collection. As we go through the attestation process we will also note lessons learned and how we will adjust our processes in the future.

Why do kitchen gadgets appear on Facebook after looking up recipes online, or ads for running shoes pop up after searching for new training tips? How is it that the Internet seems to know so much about our interests? These kinds of experiences are due to the ubiquitous and much less tasty cousin of the chocolate chip cookie: the web cookie. This talk will cover the basic concepts behind cookies, the upside of how they can be beneficial to you, and the downside of how they're also used to track your web browsing habits to tailor marketing. Finally, suggestions will be provided for taking back some privacy and control. This talk is presented by Kay Avila, Sr. Security Engineer at NCSA. This presentation will be recorded.

Slides: ChipsAnnoy-KayAvila-2020Aug18.pdf

Learn more about CSD: security.ncsa.illinois.edu

Learn more about NCSA: ncsa.illinois.edu

Members of NCSA's Cybersecurity Directorate (CSD) will be presenting a workshop, a paper, and two posters at PEARC20. Below is a summary of our activities. We hope you will join us for them.

- The Fourth Workshop on Trustworthy Scientific Cyberinfrastructure, hosted by Trusted CI, the NSF Cybersecurity Center of Excellence. The workshop provides an opportunity for sharing experiences, recommendations, and solutions for addressing cybersecurity challenges in research computing. NCSA is a member of Trusted CI.

- The workshop will be held Monday, July 27 from 10:00am to 2:00pm, Central Time (PEARC event page)

- Jim Basney, Jeannette Dopheide, and Kay Avila will be presenting the results of the Trustworthy Data Working Group survey on scientific data security concerns and practices.

- Phuong Cao, Satvik Kulkarni, Alex Withers, and Chris Clausen will be presenting an analysis of attacks targeting remote workers and scientific computing infrastructure during the COVID-19 pandemic at NCSA/UIUC.

- The workshop will be recorded and slides will be published on the workshop web site.

- The tutorial, The Streetwise Guide to Jupyter Security, will be co-presented by Kay Avila.

- The tutorial will be held on Monday, July 27 from 3:00pm - 7:00pm Central time (PEARC event page)

The paper, Custos: Security Middleware for Science Gateways, was written by Jim Basney, Terry Fleury, Jeff Gaynor, and their colleagues on the Custos project.

- The paper will be presented on Wednesday, July 29 from 3:35pm - 5:35pm, Central time (PEARC event page)

Poster session:

- The poster session will be held Tuesday, July 28 from 10:00am to 7:00pm, Central time (PEARC event page)

NCSA student You (Alex) Gao will be presenting the poster, SciTokens SSH: Token-based Authentication for Remote Login to Scientific Computing Environments

- Poster (Brella login required)

Jim Basney will be co-presenting a poster with his XSEDE colleagues, Use Case Methodology in XSEDE System Integration

- Poster (Brella login required)

“It’s my pleasure to welcome Scott Koranda back to NCSA as a part-time senior research scientist in our cyberinfrastructure security research (CISR) group,” said Jim Basney, who leads the CISR group in NCSA’s Cybersecurity Division. Scott worked at NCSA back in the late 90s as part of NCSA’s HPC consulting group, before he left NCSA to join LIGO. Since then, Scott has been a senior scientist at the University of Wisconsin-Milwaukee (UWM) and a partner at Spherical Cow Group (SCG). Scott will continue his work with UWM and SCG while joining NCSA part-time.

Scott brings a wealth of experience and expertise to NCSA on the topic of identity and access management (IAM) for international science projects. Jim and Scott were PI and co-PI on CILogon 2.0, an NSF-funded project to add the collaboration management capabilities of COmanage to NCSA’s CILogon service, resulting in an integrated IAM platform for science projects that NCSA now offers as a non-profit subscription service. Scott’s new role at NCSA enables him to devote more attention to the support and growth of the CILogon service.

“Scott is a very valuable addition to our team and really grows NCSA’s expertise in cybersecurity and identity management research,” said Alex Withers, who is NCSA’s chief information security officer and the manager of NCSA’s Cybersecurity Division. Jim added, “I met Scott back when he was at NCSA and I was a graduate student on the HTCondor project, and he’s been a valued research collaborator of mine ever since. Our ability to recruit Scott back to NCSA is a testament to our shared vision for CILogon’s growing role in meeting the IAM needs of research collaborations to enable scientific discovery.”

NCSA's cybersecurity group has multiple open positions. Join our team to work on cutting-edge software and projects that help secure cyberinfrastructure for national and international science and engineering research communities. To view the job postings and apply, click the corresponding links below.

- Security Engineer / Senior Security Engineer: https://jobs.illinois.edu/academic-job-board/job-details?jobID=129004

- Research Scientist / Senior Research Scientist: https://jobs.illinois.edu/academic-job-board/job-details?jobID=111608