...

The Workflow Broker (formerly "Ensemble Broker") is a GSI web service designed to orchestrate and manage complex sequences of large computational jobs on production resources.

Features

- Expressive (XML) description logic which allows for

  - Structuring workflow in two independent layers:

    - Top level (broker-centric): a directed acyclic graph of executable nodes;

    - Individual node payloads: scripts to be run on the production resource, usually inside an Elf container;

  - Configuration of scheduling and execution properties on a node-by-node basis;

- Integration with scheduling systems, such as MOAB;

- (Potentially recursive) service-side parameterized expansion of nodes (done lazily, as the nodes become ready to run);

- Fully asynchronous staging, submission and monitoring of jobs;

- State management through a persistent object-relational layer (Hibernate);

- Inspection of workflow state enabled via event bus as well as API calls on the broker service itself;

- Cancellation and restart of nodes or entire workflows.
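Two of the features listed above, the top-level DAG of executable nodes and the lazy parameterized expansion of nodes, can be illustrated with a minimal sketch. Everything in it is hypothetical: the class `LazyDagSketch`, the `Node` fields, and the `name[value]` naming of expanded instances are assumptions made for illustration, not the broker's actual classes or its XML description format. The sketch only shows the ordering idea: a node is expanded into concrete jobs at the moment all of its dependencies have completed, not when the workflow is submitted.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: a DAG whose parameterized nodes are expanded only when they become ready. */
public class LazyDagSketch {

    /** A node, the names of the nodes it depends on, and optional parameter values. */
    static final class Node {
        final String name;
        final Set<String> dependsOn;
        final List<String> parameterValues; // empty list means "not parameterized"

        Node(String name, Set<String> dependsOn, List<String> parameterValues) {
            this.name = name;
            this.dependsOn = dependsOn;
            this.parameterValues = parameterValues;
        }
    }

    /** Returns the order in which concrete jobs would be launched (run sequentially here). */
    static List<String> run(List<Node> nodes) {
        Map<String, Node> pending = new LinkedHashMap<>();
        for (Node n : nodes) {
            pending.put(n.name, n);
        }
        Set<String> completed = new HashSet<>();
        List<String> launched = new ArrayList<>();

        while (!pending.isEmpty()) {
            // A node is ready once every node it depends on has completed.
            List<Node> ready = new ArrayList<>();
            for (Node n : pending.values()) {
                if (completed.containsAll(n.dependsOn)) {
                    ready.add(n);
                }
            }
            if (ready.isEmpty()) {
                throw new IllegalStateException("cycle or missing dependency among " + pending.keySet());
            }
            for (Node n : ready) {
                // Lazy expansion: the parameter values are applied only now.
                if (n.parameterValues.isEmpty()) {
                    launched.add(n.name);
                } else {
                    for (String value : n.parameterValues) {
                        launched.add(n.name + "[" + value + "]");
                    }
                }
                completed.add(n.name);
                pending.remove(n.name);
            }
        }
        return launched;
    }

    public static void main(String[] args) {
        List<Node> workflow = List.of(
            new Node("prepare", Set.of(), List.of()),
            new Node("simulate", Set.of("prepare"), List.of("0.1", "0.2")),
            new Node("collect", Set.of("simulate"), List.of()));
        System.out.println(run(workflow));
    }
}
```

In the real service the ready nodes are staged and submitted asynchronously; the sequential loop here stands in for that only to keep the example short.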

...

Condor scales very well, especially when managing a Condor pool (usually of single-processor systems). It also has an expressive language for describing DAGs of jobs to be executed remotely. In its Condor-G flavor, though (which opens the necessary door to parallel jobs), it suffers from scalability and reliability concerns that arise from using GRAM, and which our broker can circumvent by virtue of its native-batch-system protocol option. Also, since Condor does not automatically provide a cluster-local component (i.e., our script container), it is not possible, in the absence of ad hoc scripting and logging mechanisms, to obtain finer-grained monitoring of remote jobs than what the resource manager and GRAM report about them.

Neither of these alternatives has a readily available, generalized mechanism capable of handling parameterization of workflows.

Interactions

This service sends and receives events via a JMS message bus, and acts as a client to the Host Information Service in order to configure the payloads to run on specific resources. For its relative position in the general client-service layout, see the infrastructure diagram; a slightly more detailed look at the service topology is illustrated by an interaction diagram for a typical simple submission.

...

In order to run through the broker, a Workflow Description must be created and submitted (refer to the Siege Tutorial for a walk-through of this process). This description in turn will contain one or more scripted payloads, which can range from trivial to very complex, depending on the nature of the work to be accomplished through a single job launched onto the host; however, no special changes to or recompilation of the underlying computational codes are necessary.

Siege also allows the user to inspect the state of workflows which have been or are being processed and to view all the events published in connection with them; this is particularly useful for debugging purposes. Once again this can be done entirely asynchronously (i.e., one can disconnect and then, upon reconnecting, retrieve a historical view of the state of the workflow). The level or granularity of information remotely propagated over the event bus can be controlled by the user on a workflow-by-workflow basis.

Design

Interfaces

API

Service WSDL

The broker API, which includes both workflow-management as well as administrative methods, is as follows:

```java
public interface IBrokerCore
{
    /**
     * @param submission description of workflow to submit.
     * @return handle containing identifiers for workflow
     */
    public SubmissionHandle submit( BrokerSubmission submission );

    /**
     * @param sessionId should uniquely identify the node to this service.
     * @param status the state of the node.
     */
    public void updateNodeStatus( Integer sessionId, String status, Property[] output );

    public void archiveWorkflowsTo( String archiveURI, String configurationXml );

    /**
     * @param id the primary key of the workflow object, not the submission
     */
    public String getDescriptorAsXml( Integer id );

    public String getWorkflowAsXml( Integer id );

    public String getNodeAsXml( Integer id );

    public AggregateSummary getStateForUser( String user );

    public StatusInfo[] getStatusInfoForUser( String user, Integer lastId );

    public StatusInfo getStatusInfoForId( String globalId );

    public StatusInfo[] getStatusInfo( Integer lastId );

    public void deleteAllForUser( String user );
}

/*
 * Administrative methods.
 */
public interface IBroker extends IBrokerCore
{
    public UserSubmissions[] getUserInfo();

    public AggregateSummary getServiceState();

    public void cancel( String globalId );

    public void restart( String globalId );

    public void deleteAllCompleted();

    public void deleteAllBefore( Long time );

    public void delete( String globalId );
}
```

See further BrokerSubmission for a description of the submission objects. (We leave out discussion of the administrative query return types, since in most cases querying will take place through Siege, and those objects will remain hidden from the user; their schematic representation can nonetheless be obtained by inspecting the Broker WSDL.) Refer to API.
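As an illustration of how a client might use the `lastId` argument of `getStatusInfo` to retrieve status incrementally after reconnecting, here is a minimal sketch. It rests on assumptions that the source does not confirm: that `lastId` means "return only entries with an id greater than this one", and that a status entry carries an integer id, a global id and a state string. The `StatusInfo` class below is a hypothetical stand-in (the real type is defined by the Broker WSDL), and `InMemoryBroker` and `StatusCursor` are invented for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of incremental status polling; not the broker's real client code. */
public class StatusPollingSketch {

    /** Stand-in for the broker's StatusInfo; these fields are assumptions. */
    static final class StatusInfo {
        final int id;
        final String globalId;
        final String state;

        StatusInfo(int id, String globalId, String state) {
            this.id = id;
            this.globalId = globalId;
            this.state = state;
        }
    }

    /** The one query method used here, with the signature shown in IBrokerCore. */
    interface StatusSource {
        StatusInfo[] getStatusInfo(Integer lastId);
    }

    /** In-memory fake. ASSUMPTION: only entries with id greater than lastId are returned. */
    static final class InMemoryBroker implements StatusSource {
        private final List<StatusInfo> log = new ArrayList<>();

        void record(String globalId, String state) {
            log.add(new StatusInfo(log.size() + 1, globalId, state));
        }

        @Override
        public StatusInfo[] getStatusInfo(Integer lastId) {
            return log.stream().filter(s -> s.id > lastId).toArray(StatusInfo[]::new);
        }
    }

    /** Remembers the highest id seen so that each poll returns only new entries. */
    static final class StatusCursor {
        private final StatusSource source;
        private int lastId = 0;

        StatusCursor(StatusSource source) {
            this.source = source;
        }

        List<StatusInfo> poll() {
            StatusInfo[] fresh = source.getStatusInfo(lastId);
            for (StatusInfo s : fresh) {
                lastId = Math.max(lastId, s.id);
            }
            return Arrays.asList(fresh);
        }
    }

    public static void main(String[] args) {
        InMemoryBroker broker = new InMemoryBroker();
        StatusCursor cursor = new StatusCursor(broker);
        broker.record("wf-1", "SUBMITTED");
        broker.record("wf-1", "RUNNING");
        for (StatusInfo s : cursor.poll()) {
            System.out.println(s.id + " " + s.globalId + " " + s.state);
        }
    }
}
```

If the service interprets `lastId` differently, only the filter in the fake and the bookkeeping in `StatusCursor` would need to change.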

Implementation

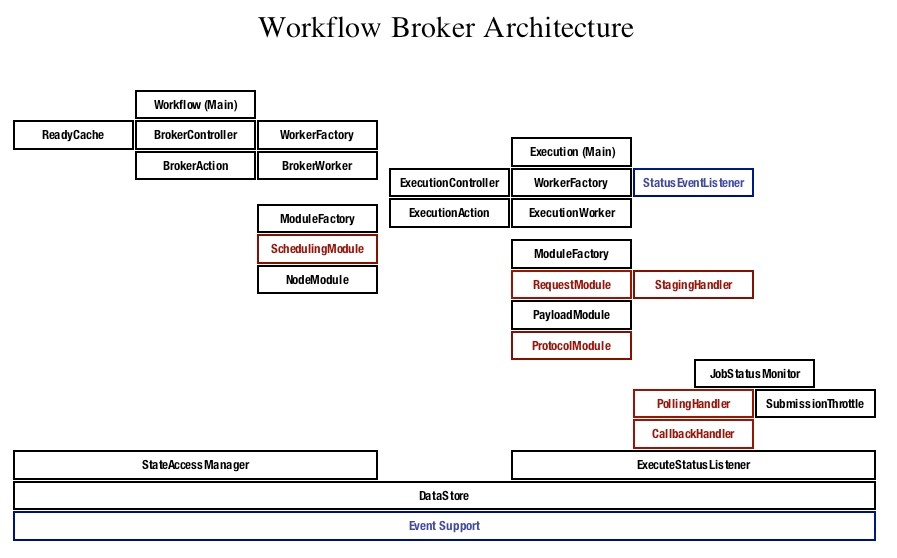

(Red signifies a component which makes external calls, either to web-service ports, file-transfer protocols, or remote execution via ssh.)

The workflow broker consists of three functional units:

...

- Underlying each unit is a "controller", consisting of a queue and a subscriber. Actions are placed on the queue, and the subscriber delegates them to appropriate workers.

- An action is typed, corresponding to some state associated with the workflow or the job (e.g., Workflow Submitted, Node Completed, etc.), and contains (minimally) an id pointing to the workflow or part of the workflow to be acted upon.

- A worker is typed in accordance with the action it is capable of handling, and is threaded.

- The subscriber uses a factory (Spring) to match the worker to the action, then makes a request to a threaded-worker-pool in order to run the worker.
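The controller pattern described in the list above (queue, subscriber, typed actions, typed workers, worker pool) can be sketched as follows. This is a simplified illustration under stated assumptions, not the broker's implementation: a plain `Map` from action type to worker constructor stands in for the Spring factory, `java.util.concurrent` classes stand in for the queue and the threaded worker pool, and all class and enum names (`ControllerSketch`, `ActionType`, `Action`, `Controller`) are hypothetical.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

/** Hypothetical sketch of a controller: a queue plus a subscriber that delegates to pooled workers. */
public class ControllerSketch {

    /** Action types correspond to workflow or node states. */
    enum ActionType { WORKFLOW_SUBMITTED, NODE_COMPLETED }

    /** An action carries its type and, minimally, the id of the thing to act upon. */
    static final class Action {
        final ActionType type;
        final int targetId;

        Action(ActionType type, int targetId) {
            this.type = type;
            this.targetId = targetId;
        }
    }

    static final class Controller implements AutoCloseable {
        private final BlockingQueue<Action> queue = new LinkedBlockingQueue<>();
        private final ExecutorService workerPool = Executors.newFixedThreadPool(4);
        // Stand-in for the Spring factory: maps an action type to a worker constructor.
        private final Map<ActionType, Function<Action, Runnable>> workerFactory;
        private final Thread subscriber;

        Controller(Map<ActionType, Function<Action, Runnable>> workerFactory) {
            this.workerFactory = workerFactory;
            this.subscriber = new Thread(this::drain, "subscriber");
            this.subscriber.setDaemon(true);
            this.subscriber.start();
        }

        /** Places an action on the queue; returns immediately. */
        void enqueue(Action action) {
            queue.add(action);
        }

        /** Subscriber loop: take an action, match a worker to it, hand the worker to the pool. */
        private void drain() {
            try {
                while (true) {
                    Action action = queue.take();
                    Function<Action, Runnable> factory = workerFactory.get(action.type);
                    if (factory != null) {
                        workerPool.submit(factory.apply(action));
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // shutdown requested
            }
        }

        /** Stops the subscriber, then lets already submitted workers finish. */
        @Override
        public void close() throws InterruptedException {
            subscriber.interrupt();
            subscriber.join();
            workerPool.shutdown();
            workerPool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }

    public static void main(String[] args) throws Exception {
        CountDownLatch done = new CountDownLatch(2);
        Map<ActionType, Function<Action, Runnable>> factory = new EnumMap<>(ActionType.class);
        factory.put(ActionType.WORKFLOW_SUBMITTED, a -> () -> {
            System.out.println("handling submitted workflow " + a.targetId);
            done.countDown();
        });
        factory.put(ActionType.NODE_COMPLETED, a -> () -> {
            System.out.println("handling completed node " + a.targetId);
            done.countDown();
        });
        try (Controller controller = new Controller(factory)) {
            controller.enqueue(new Action(ActionType.WORKFLOW_SUBMITTED, 1));
            controller.enqueue(new Action(ActionType.NODE_COMPLETED, 7));
            done.await(5, TimeUnit.SECONDS);
        }
    }
}
```

Separating the subscriber from the workers keeps the queue draining quickly even when an individual worker blocks on an external call; the two workers above may print in either order because they run on different pool threads.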

...