NCSA Workflow Management Infrastructure (as of October 21, 2009)


- the graph itself is directed and acyclic, meaning there are no conditionals or loops at this level (however, conditional or repeated submissions of a workflow with varying values can be achieved through the use of the Trigger service, described below);

- any node or subgraph in the workflow can be "parameterized", i.e., submitted multiple times with varying input values.
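The two properties above can be illustrated with a minimal Java sketch. It assumes a simple adjacency-list representation. The names `WorkflowGraph`, `topologicalOrder` and `Parameterization.expand` are hypothetical and are not PWE's API. Kahn's algorithm yields a valid execution order and rejects any graph that contains a cycle. A parameterized node is expanded into one submission per input value.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical in-memory workflow graph: nodes plus dependency edges. */
class WorkflowGraph {
    private final Map<String, List<String>> successors = new LinkedHashMap<>();

    void addNode(String id) {
        successors.putIfAbsent(id, new ArrayList<>());
    }

    /** Adds an edge meaning "to" may only run after "from" has completed. */
    void addEdge(String from, String to) {
        addNode(from);
        addNode(to);
        successors.get(from).add(to);
    }

    /** Kahn's algorithm: returns a topological order, or throws if the graph is not a DAG. */
    List<String> topologicalOrder() {
        Map<String, Integer> inDegree = new HashMap<>();
        for (String id : successors.keySet()) inDegree.putIfAbsent(id, 0);
        for (List<String> outs : successors.values())
            for (String to : outs) inDegree.merge(to, 1, Integer::sum);

        // Start with every node that has no unfinished predecessors.
        Deque<String> ready = new ArrayDeque<>();
        for (String id : successors.keySet()) if (inDegree.get(id) == 0) ready.add(id);

        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String id = ready.poll();
            order.add(id);
            for (String to : successors.get(id))
                if (inDegree.merge(to, -1, Integer::sum) == 0) ready.add(to);
        }
        // Nodes left unvisited can only be on a cycle.
        if (order.size() != successors.size())
            throw new IllegalStateException("workflow graph contains a cycle");
        return order;
    }
}

/** Hypothetical parameterization: one submission per value of a single varied input. */
class Parameterization {
    static List<Map<String, String>> expand(Map<String, String> baseInputs, String param, List<String> values) {
        List<Map<String, String>> submissions = new ArrayList<>();
        for (String value : values) {
            Map<String, String> inputs = new LinkedHashMap<>(baseInputs);
            inputs.put(param, value);
            submissions.add(inputs);
        }
        return submissions;
    }
}
```

Because the graph itself must stay acyclic, any repetition has to come from outside it. For example, the whole workflow can be resubmitted through the Trigger service.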

...

- a front-end desktop client, Siege;

- information and data transfer services (*Host Information*, *Event Repository*, *Tuple Space [VIZIER]*);

- a transient, compute-resource-resident container for running the payload scripts (ELF);

- a service for triggering actions, most typically submissions of workflows to PWE, on the basis of events or as cron jobs;

- a message bus.
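The event-driven side of the Trigger service can be sketched as a rule that watches one topic and runs an action when a condition on the event payload holds. The `EventTrigger` class, the topic string and the payload keys are hypothetical illustrations. Cron-style scheduling is not shown.

```java
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;

/**
 * Hypothetical event-driven trigger: when an event on the watched topic satisfies
 * the condition, the action runs (most typically, a workflow submission to PWE).
 */
class EventTrigger {
    private final String topic;
    private final Predicate<Map<String, String>> condition;
    private final Consumer<Map<String, String>> action;

    EventTrigger(String topic, Predicate<Map<String, String>> condition,
                 Consumer<Map<String, String>> action) {
        this.topic = topic;
        this.condition = condition;
        this.action = action;
    }

    /** Called for each incoming event; returns true if the action was fired. */
    boolean onEvent(String eventTopic, Map<String, String> payload) {
        if (!topic.equals(eventTopic) || !condition.test(payload)) {
            return false;
        }
        action.accept(payload);
        return true;
    }
}
```

A rule such as "resubmit the workflow when a job reports FAILED" is one way to obtain the conditional or repeated submissions that the acyclic graph cannot express on its own.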

...

- Submission Protocol Module

- IBM LoadLeveler - for submitting (again via [GSI]SSH) to the LoadLeveler scheduler.

- Job Status Handler


- AIX Polling Handler - for monitoring the status of interactively submitted jobs (polls the head node via [GSI]SSH using 'ps');

- IBM LoadLeveler Job Status Handler - this is just a façade for receiving the callback events sent by the User Exit Trigger (see below).
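The polling approach can be sketched in Java. The sketch assumes the handler runs `ps -p <pid> -o pid=` on the head node. The exact command the AIX Polling Handler issues is not given here, and `PsPoller` and its methods are hypothetical. With the empty header `pid=`, ps prints only the PID when the process exists and nothing when it does not. The liveness check therefore reduces to a string comparison.

```java
import java.util.List;

/**
 * Hypothetical helper illustrating the AIX Polling Handler's approach: ask 'ps' on
 * the head node (over ssh or gsissh) whether the job's process is still alive.
 */
class PsPoller {
    /** Builds the remote command; the ssh client name and the ps options are assumptions. */
    static List<String> pollCommand(String sshClient, String headNode, long pid) {
        return List.of(sshClient, headNode, "ps", "-p", Long.toString(pid), "-o", "pid=");
    }

    /** "-o pid=" suppresses the header, so ps prints the PID only if the process exists. */
    static boolean isRunning(String psOutput, long pid) {
        String wanted = Long.toString(pid);
        for (String line : psOutput.split("\\R")) {
            if (line.trim().equals(wanted)) {
                return true;
            }
        }
        return false;
    }
}
```

The command list can be passed to `java.lang.ProcessBuilder`. A match in its standard output means the job is still running, and an empty result means it has exited.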

...

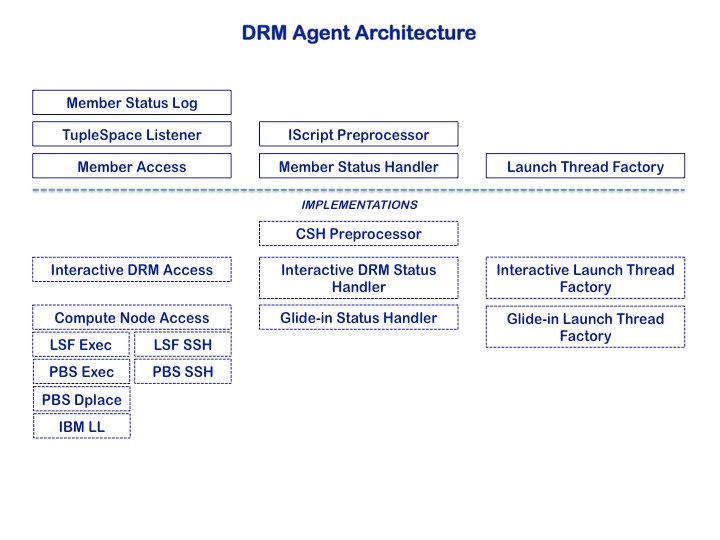

There are actually two kinds of DRM Agent, as indicated above. The Interactive Manager is akin to the pre-WS Globus job-manager: it is meant to run on the head node of a system where back-end Java is unavailable, and it monitors arbitrary jobs (not necessarily ELF) put through the resident batch system. Our concern here, however, is with the second type of manager. This manager is itself a job (step) submitted to the scheduler or resource manager, and once active, it distributes the work among the given number of ELF singleton members. As with PWE's internals, this agent has a system-specific component, as can be seen from the following diagram of the agent's architecture.


- creating a "nodefile" from the queue of available cores/CPUs

- generating the necessary bootstrap.properties and container.xml files for ELF to run the member script

- generating a command file (bash) to be used in conjunction with member launch

- releasing the resources when the member has completed

- issuing an appropriate Cancel command when necessary
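The resource bookkeeping in the first and last of these steps can be sketched as a queue of per-core host entries. The sketch assumes the common machinefile convention of one hostname per line per allocated core. `CorePool` and its methods are hypothetical. Generating bootstrap.properties, container.xml and the bash command file is not shown.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/**
 * Hypothetical core pool: one queue entry per free core, holding that core's hostname
 * (assumed convention: a host appears once per core allocated on it).
 */
class CorePool {
    private final Deque<String> available = new ArrayDeque<>();

    CorePool(List<String> hostPerCore) {
        available.addAll(hostPerCore);
    }

    /** Number of cores still free in the pool. */
    int free() {
        return available.size();
    }

    /** Removes the requested number of cores from the front of the queue for one member. */
    List<String> allocate(int cores) {
        if (cores > available.size()) {
            throw new IllegalStateException("requested " + cores + " cores, only " + available.size() + " free");
        }
        List<String> taken = new ArrayList<>();
        for (int i = 0; i < cores; i++) {
            taken.add(available.poll());
        }
        return taken;
    }

    /** Renders a member's allocation as nodefile text, one hostname per line. */
    static String nodefile(List<String> hosts) {
        return String.join("\n", hosts) + "\n";
    }

    /** Returns a finished member's cores to the pool. */
    void release(List<String> hosts) {
        available.addAll(hosts);
    }
}
```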

...

This native C wrapper around LoadLeveler's API serves two purposes:

- to provide LoadLeveler with the arguments necessary for reporting job state back to PWE (i.e., the "User Exit" parameters in the llsubmit signature).

...

- the job command file is created with this name;

- the command-line arguments are translated into LoadLeveler's job properties pragmas (using the '# @ property = value' syntax; the current environment is also passed on to the job step using '# @ environment=COPY_ALL');

- the text redirected to the wrapper's stdin is written into the file as its contents;

- a properties file is created using the job command name as prefix; this file contains arguments necessary for the callback trigger;

- llsubmit is called using the job command file, the path to the trigger script (see below), and the properties file as arguments;

- if the call is successful, the job step id is returned to PWE on stdout.
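The job-command-file steps of the wrapper are sketched below in Java for consistency with the other examples. The actual wrapper is native C. The `key=value` argument format and the `JobCommandFile` class are assumptions. `job_name` and `class` are ordinary LoadLeveler keywords used here only as sample input. The sketch also emits a `# @ queue` line because LoadLeveler queues a job step only when it reads one. The source does not say whether the original wrapper adds this line or expects it on stdin.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical Java rendering of the wrapper's job-command-file steps (the real wrapper is C). */
class JobCommandFile {
    /** Parses arguments of the assumed form key=value, keeping their order. */
    static Map<String, String> parseArgs(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("expected key=value, got: " + arg);
            }
            props.put(arg.substring(0, eq), arg.substring(eq + 1));
        }
        return props;
    }

    /** Emits one "# @ key = value" line per property, then the environment and queue lines, then the stdin text. */
    static String build(Map<String, String> props, String scriptFromStdin) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append("# @ ").append(e.getKey()).append(" = ").append(e.getValue()).append('\n');
        }
        // Pass the submitting environment on to the job step.
        sb.append("# @ environment = COPY_ALL\n");
        // Assumption: a queue keyword is required for LoadLeveler to queue the step,
        // so this sketch adds one before the script body.
        sb.append("# @ queue\n");
        sb.append(scriptFromStdin);
        return sb.toString();
    }
}
```

In this sketch the caller supplies the stdin text. In Java 9 or later it could be read with `new String(System.in.readAllBytes(), StandardCharsets.UTF_8)`.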

...

4.2 PWE Notification Agent [= LLUserExitTrigger]

This is the Java trigger called by LoadLeveler to report edge events ("User Exit"). It is implemented as another RCP headless application. Although the trigger was designed specifically for this purpose, we have nonetheless made the LoadLeveler implementation a concrete subclass of an abstract class, PweNotificationAgent.
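The abstract-class arrangement can be illustrated with a template method. The base class fixes the notification flow, and a scheduler-specific subclass only decodes the callback arguments. Apart from the name `PweNotificationAgent`, everything in this sketch is hypothetical: the `JobState` values, the argument layout, `notifyPwe` and `LoadLevelerNotificationAgent`. Delivery to PWE is stubbed out with a print.

```java
import java.util.Locale;

/** Generic job states reported back to PWE (hypothetical set). */
enum JobState { STARTED, COMPLETED, FAILED, UNKNOWN }

/** Sketch of the abstract base: subclasses decode scheduler-specific callback arguments. */
abstract class PweNotificationAgent {
    /** Scheduler-specific: extract the job (step) id from the callback arguments. */
    protected abstract String jobId(String[] args);

    /** Scheduler-specific: map the callback arguments to a generic state. */
    protected abstract JobState state(String[] args);

    /** Template method: decode, format and deliver the notification; returns the message sent. */
    public final String notifyPwe(String[] args) {
        String message = jobId(args) + " " + state(args);
        send(message);
        return message;
    }

    /** Stub: the real agent's transport to PWE is not described here. */
    protected void send(String message) {
        System.out.println(message);
    }
}

/** Hypothetical LoadLeveler subclass; the argument layout (step id, state token) is assumed. */
class LoadLevelerNotificationAgent extends PweNotificationAgent {
    @Override
    protected String jobId(String[] args) {
        return args[0];
    }

    @Override
    protected JobState state(String[] args) {
        switch (args[1].toLowerCase(Locale.ROOT)) {
            case "started": return JobState.STARTED;
            case "completed": return JobState.COMPLETED;
            case "failed": return JobState.FAILED;
            default: return JobState.UNKNOWN;
        }
    }
}
```

Under this design, supporting a second scheduler would only require another subclass that implements `jobId` and `state`.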

...